Defamation in the Age of Deepfakes: How South Park Could Influence Insurance Exposure

by Lauren Gonzalez

In July 2025, South Park returned for its 27th season with an episode titled “Sermon on the 'Mount.” The episode featured a crude, deepfaked version of Donald Trump delivering a surreal public service announcement as part of a fictional defamation settlement with the town’s citizens. The timing drew notice because South Park’s parent company, Paramount, had just resolved a real defamation dispute with Trump. Allegations surfaced that, as part of the settlement, Trump’s legal team had even pushed for editorial concessions aimed at limiting negative portrayals across Paramount’s platforms.

Regardless of the truth of these allegations, this blurring of parody and actual legal pressure underscores a larger problem: what happens when satire intersects with the emerging risks of synthetic media? Deepfakes—AI-generated video or audio that fabricates realistic depictions—make it increasingly difficult to separate humor from harm. For insurers, that uncertainty complicates questions of what’s real, what’s damaging, and what’s covered.

The issue is not confined to comedy. In Maryland, a former principal sued after a deepfake audio clip allegedly created by a colleague falsely portrayed him making racist remarks. Despite expert findings that the audio was likely AI-generated, the district took no corrective action, leading to defamation and negligence claims.

As synthetic media collides with defamation litigation and reputational harm, what once would have been dismissed as satire is now entangled with high-stakes settlement strategy, putting claims teams directly in the crossfire.

The Claims Environment Is Changing Rapidly and Materially

Deepfake content is no longer confined to fringe conspiracy theories or internet culture. As of late 2024, insurers are encountering synthetically manipulated material in first-party and third-party claims alike. According to Swiss Re’s 2025 SONAR report, insurers are flagging increasing volumes of altered video footage, doctored PDFs, and falsified injury documentation in liability cases.

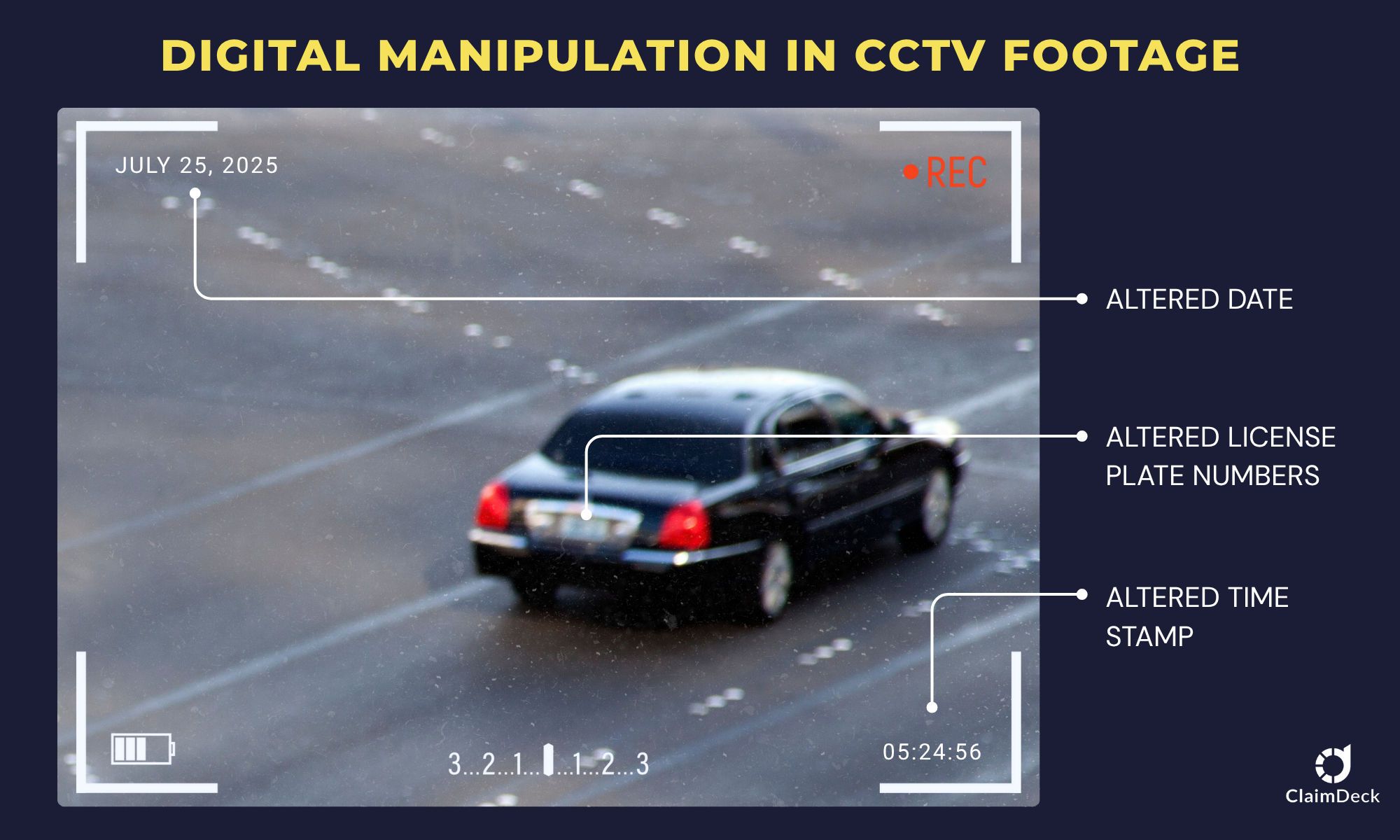

This concern is echoed in the legal sector. A November 2024 report by Kennedys Law described a personal injury claim where CCTV footage was digitally manipulated—altering the date, time, and vehicle registration—before being exposed through forensic review. It underscores how AI-generated misleading content is more than a novelty: the World Economic Forum ranked disinformation, fueled by deepfakes, as a top global risk to public trust and information accuracy.

Additional legal commentary reinforces the growing uncertainty around how deepfakes will be treated under defamation law. An October 2024 article from the Wake Forest Journal of Law and Policy highlights two key challenges: first, courts have yet to definitively rule on whether AI-generated images and videos qualify as “false statements” under defamation standards; and second, the anonymous nature of AI content creation makes identifying liable parties difficult and expensive. The article notes that while reputational harm is real, deepfakes often fall into legal gray zones, blurring the line between deception and protected speech under the First Amendment.

Such cases are no longer novel; they’re systemic. They underline the urgent need for metadata validation, forensic workflows, and structured evidentiary review at first notice of loss. Insurers can no longer afford to treat submitted media, whether it’s video, audio, or visual documentation, as inherently trustworthy, especially when claim liability hinges on visual records or reputational inference.

The implications for insurers go well beyond detection. The rise of synthetic media creates volatility across the entire claims lifecycle:

Triage becomes slower and more labor-intensive, requiring adjusters to consult legal, communications, and forensic teams earlier and more frequently.

Liability assessment grows murkier, as standard evidence may now require technical authentication before it can be treated as credible.

Reserving practices are strained. Carriers must account for the possibility that a claim based on a deepfake could be legitimate or fabricated, and this uncertainty inflates reserves, especially in high-profile or media-sensitive cases.

Litigation timelines lengthen. With courts still undecided on admissibility standards and the legal definitions of AI-generated content, claims are increasingly entangled in procedural questions that delay resolution.

Reputational risk compounds. If a fraudulent claim is paid—or worse, denied publicly without substantiated proof—it may erode policyholder trust, media relationships, and public credibility.

These impacts are felt most acutely in lines of business where reputation, identity, or media exposure drives risk: professional liability, directors & officers (D&O), cyber, and certain casualty classes. Increasingly, even personal lines are seeing synthetic materials submitted to support questionable claims.

Insurers are now being forced to consider two uncomfortable realities: first, that traditional evidence is no longer inherently reliable, and second, that existing systems weren’t built to validate, track, or escalate these new forms of risk. Without structured workflows and cross-functional coordination, even the best-prepared carriers are adjusting blind.

The ambiguity is compounded when the synthetic content falls under the guise of satire or parody, categories that enjoy broad First Amendment protections. The South Park episode featuring a deepfaked Donald Trump parody is a prime example. While the intent was comedic, its timing, coinciding with the aforementioned defamation settlement, highlights the thin line between satire and reputational harm in the public eye.

From a claims standpoint, this raises difficult questions: if a satirical deepfake leads to reputational fallout, does that trigger coverage? If parody sparks litigation and results in a quiet settlement, should it be treated like a standard defamation claim, even when the content was arguably protected speech? And critically, how do claims teams differentiate between malicious manipulation and cultural commentary when both may use the same synthetic media tools?

As synthetic content becomes both more prevalent and more protected, claims professionals must be able to separate editorial intent from evidentiary impact, because even if a deepfake is “just a joke,” the legal, financial, and reputational consequences are anything but.

Operational Consequences for Claims Teams

These exposures translate into real operational strain. Claims managers must coordinate across legal, PR, forensic, and executive teams. They must also track non-monetary settlement terms long after the check is cut.

These pressures will likely drive up costs. Carriers may need to invest in detection tech, expand investigative bandwidth, and retrain frontline staff to flag digital manipulation. As fraud becomes harder to detect, more will get through, and that will drive up both premiums and customer skepticism.

Even if no fraud occurs, a mishandled deepfake claim can damage a carrier’s brand and erode policyholder trust. In a media-driven era, perception is part of the liability.

ClaimDeck’s Solution

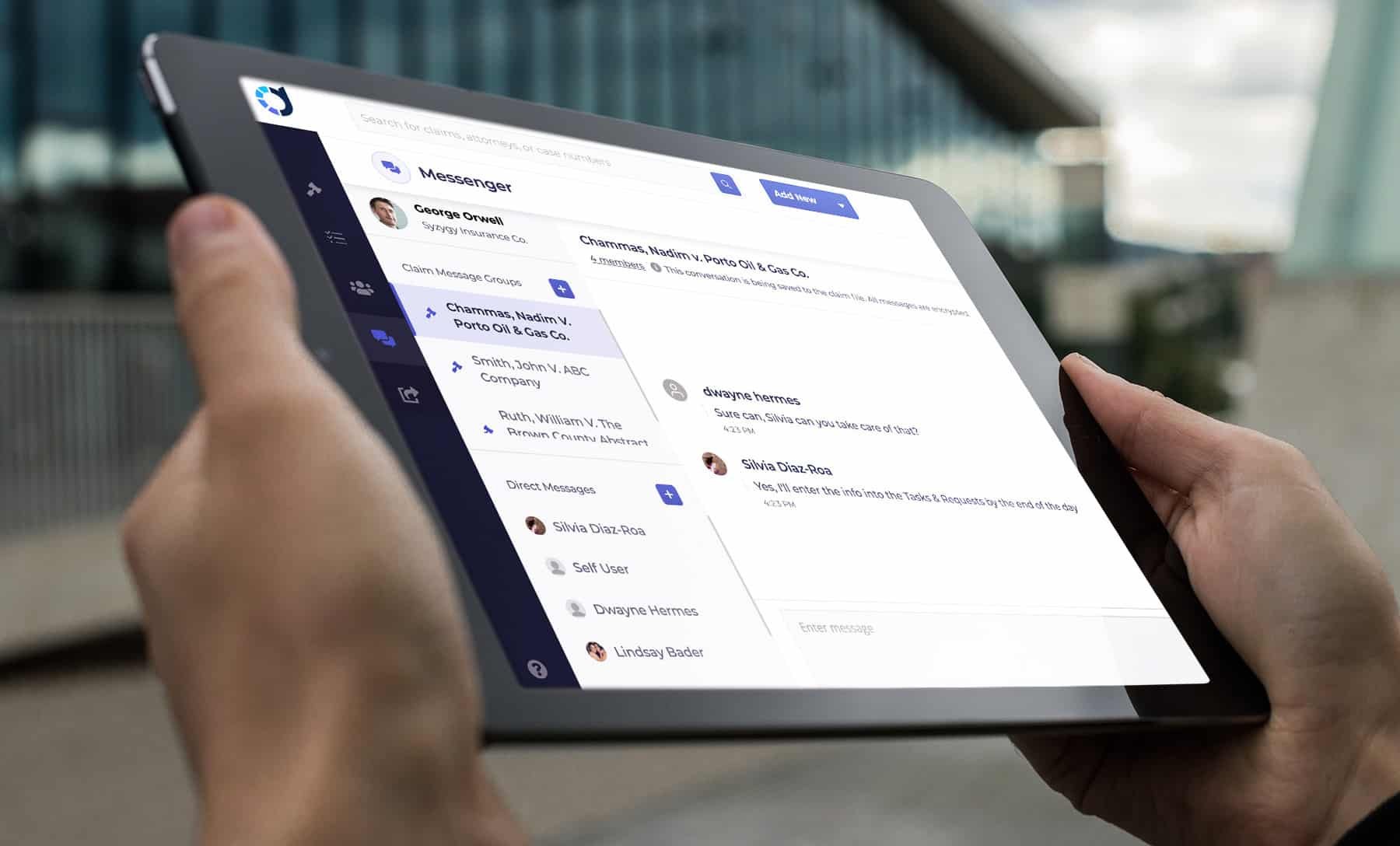

ClaimDeck acts as the connective tissue between claims teams and counsel when litigation begins. In cases involving synthetic media, satire, or reputational exposure, the real risk isn’t just the content itself; it’s the coordination failures that let confusion spiral into cost.

ClaimDeck centralizes evidence, filings, and communications so insurers can quickly establish a “single source of truth.” When potentially spoofed media surfaces (whether it’s a doctored video, a satirical deepfake, or a reputational flashpoint), teams need airtight chains of custody, clear records of decision-making, and instant alignment between adjusters, counsel, and communications leads. ClaimDeck’s structured, audit-ready messaging ensures nothing gets lost in the noise, and every response is tied back to the matter at hand.

ClaimDeck’s structured collaboration means adjusters, counsel, and communications teams share the same evidence and analysis in real time. That reduces the lag between claim notice and a realistic view of exposure, letting insurers set reserves earlier and with more confidence.

For claims adjusters, this means less chasing, fewer surprises, and clear visibility into who’s doing what, by when. For executives, it may help limit exposure while also providing deeper insight into the downstream consequences of synthetic-media-driven claims: how they affect cycle times, settlement strategies, and reputational outcomes across the portfolio. In short, ClaimDeck doesn’t just streamline litigation; it inoculates against disinformation-driven chaos by replacing guesswork with traceable, defensible collaboration.

In a world where deepfakes blur fact and fiction, ClaimDeck brings structure, speed, and accountability to the resolution process. The claims landscape is evolving faster than legal precedent can keep up. With ClaimDeck, insurers don’t just react to changing litigation; they manage it.

Learn how ClaimDeck helps carriers cut case life by 200+ days, reduce spend, and make litigation AI-ready.

ClaimDeck™ eliminates claims litigation leakage for carriers while driving process into the law firm, modernizing the litigation process.
